Streaming data, also known as event stream processing, is a data pipeline design pattern in which data points flow continuously from the source to the destination. Pipeline logic is codified, stored alongside application or infrastructure code, and run on containerized runners. The pipeline design pattern belongs to the family of structural design patterns. An item flows through the pipeline in multiple stages, and each stage performs some task on it.

In the algorithm structure design space, the pipeline processing pattern is used for algorithms in which data flows through a sequence of tasks or stages. In the context of data pipelines, there are several design patterns that can be used to build efficient and reliable pipelines. The pipeline pattern, also known as the pipes and filters design pattern, is a powerful tool in programming. In Java, a stage can be modelled as `interface Step<I, O> { O execute(I value); }`, with a static factory `static <I, O> Step<I, O> of(Step<I, O> source) { return source; }` as the entry point to a chain.
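The `Step` fragments above can be assembled into a small, type-safe chain. What follows is a minimal sketch rather than a definitive implementation: `execute` and `of` come from the text, while the `pipe` method and the `StepDemo` class are assumed names added for illustration.

```java
// A stage that turns an input of type I into an output of type O.
interface Step<I, O> {
    O execute(I value);

    // Chains this step with the next one: the output of this step becomes
    // the input of `next`. The name `pipe` is an assumption, not from the text.
    default <R> Step<I, R> pipe(Step<O, R> next) {
        return value -> next.execute(execute(value));
    }

    // Entry point, so the first stage reads like the rest of the chain.
    static <I, O> Step<I, O> of(Step<I, O> source) {
        return source;
    }
}

// Hypothetical demo class: trims a string, measures it, doubles the length.
class StepDemo {
    static Step<String, Integer> build() {
        return Step.<String, String>of(String::trim)
                .pipe(String::length)
                .pipe(n -> n * 2);
    }

    public static void main(String[] args) {
        System.out.println(build().execute("  hello  ")); // prints 10
    }
}
```

Because each `pipe` call carries its own type parameter, the compiler rejects a chain in which one stage's output type does not match the next stage's input type.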

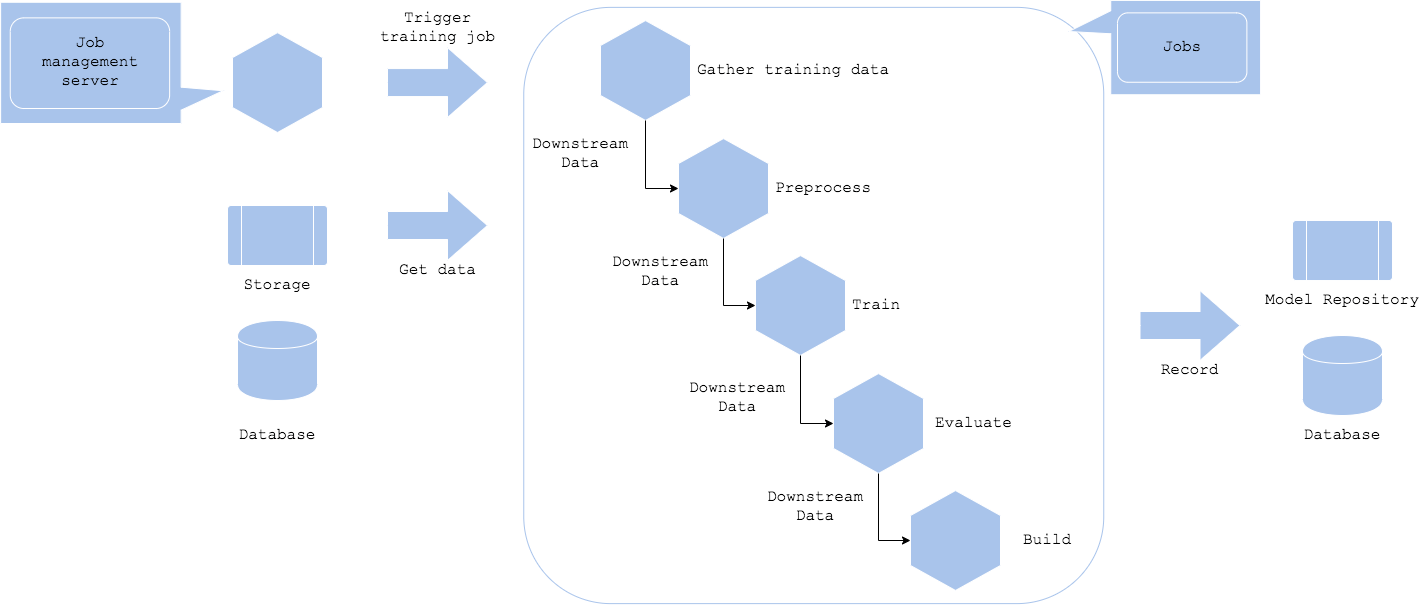

Typically, data is processed, extracted, and transformed in steps. The pipeline pattern works like an assembly line in which partial results are passed from one stage to the next. Based on our work and observations from our customers, we have identified 7 pipeline design patterns that we see in many modern tech organizations. In machine learning, a pipeline design outlines the components, stages, and workflows within the ML pipeline. The chain of responsibility and the decorator patterns can handle this task only partially.
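One way to picture the assembly line is to run each stage on its own thread and pass partial results through queues. The sketch below is illustrative only. It assumes two hypothetical stages (trim, then upper-case) and a sentinel object that marks the end of input; the class name `AssemblyLine` is also made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class AssemblyLine {
    // Unique sentinel instance (compared by identity) that signals "no more items".
    private static final String DONE = new String("<done>");

    static List<String> run(List<String> inputs) {
        BlockingQueue<String> toTrim = new LinkedBlockingQueue<>();
        BlockingQueue<String> toUpper = new LinkedBlockingQueue<>();
        List<String> results = new ArrayList<>();

        // Stage 1: trim whitespace and hand the partial result to stage 2.
        Thread trimmer = new Thread(() -> {
            try {
                for (String s = toTrim.take(); s != DONE; s = toTrim.take()) {
                    toUpper.put(s.trim());
                }
                toUpper.put(DONE); // forward the end-of-input marker
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        // Stage 2: upper-case each item and collect the finished product.
        Thread upperCaser = new Thread(() -> {
            try {
                for (String s = toUpper.take(); s != DONE; s = toUpper.take()) {
                    results.add(s.toUpperCase(Locale.ROOT));
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        trimmer.start();
        upperCaser.start();
        try {
            for (String s : inputs) {
                toTrim.put(s); // feed the first stage
            }
            toTrim.put(DONE);
            trimmer.join();
            upperCaser.join(); // after join, `results` is safe to read
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("pipeline interrupted", e);
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("  ab ", "cd "))); // prints [AB, CD]
    }
}
```

With one thread per stage and FIFO queues between them, items leave the line in the order they entered, and stage 2 can work on one item while stage 1 is already processing the next.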

The pattern is especially useful when we need to perform multiple transformations or manipulations on our input data. Think of it as a water purifier, which performs multiple tasks on the water. The main idea behind the pipeline pattern is to create a set of operations (a pipeline) and pass data through it. The main power of the pipeline is that it is flexible about the type of its result.

Wikipedia says that, in software engineering, a pipeline consists of a chain of processing elements (processes, threads, coroutines, functions, etc.), arranged so that the output of each element is the input of the next. It represents a pipelined form of concurrency, as used for example in a pipelined processor. Patterns like these are very helpful when building parallel and concurrent applications that pass data asynchronously between tasks.

This post will cover the typical code design patterns for building data pipelines. Take a look at these seven pipeline design patterns that enable continuous delivery and improve speed, agility, and quality. Structural design patterns focus on how classes and objects in a system are composed and structured to achieve greater flexibility and modularity. Collection pipelines are a programming pattern where you organize some computation as a sequence of operations that compose by taking a collection as the output of one operation and feeding it into the next.
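A collection pipeline of this kind can be sketched with the standard Java Stream API. The class and method names here (`CollectionPipelineDemo`, `shortNamesUpper`, `totalLength`) and the sample data are assumptions chosen for illustration. The second method shows the result type changing along the way, from strings to an `int`.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

class CollectionPipelineDemo {
    // Each operation takes the collection produced by the previous one.
    static List<String> shortNamesUpper(List<String> names) {
        return names.stream()
                .map(String::trim)                     // clean up each item
                .filter(n -> n.length() <= 4)          // keep short names only
                .map(n -> n.toUpperCase(Locale.ROOT))  // transform
                .sorted()                              // order the survivors
                .collect(Collectors.toList());
    }

    // The pipeline's result need not share the input's type.
    static int totalLength(List<String> names) {
        return names.stream()
                .map(String::trim)
                .mapToInt(String::length)
                .sum();
    }

    public static void main(String[] args) {
        List<String> names = List.of(" bob ", "alexander", "amy");
        System.out.println(shortNamesUpper(names)); // prints [AMY, BOB]
        System.out.println(totalLength(names));     // prints 15
    }
}
```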

I Literally Had No Vocabulary For It.

The pipeline (or chain of responsibility) design pattern is a programming pattern that allows you to chain composable units of code together to create a series of steps that make up an operation. Which design pattern to choose? A sequence of data processing stages can therefore be referred to as a data pipeline. These two terms are often used interchangeably, yet they hold distinct meanings.

The Concept Is Pretty Similar To An Assembly Line Where Each Step Manipulates And Prepares The Product For The Next Step.

Interface Step<I, O>

Pipeline Processing (Algorithm Structure Design Space): This Pattern Is Used For Algorithms In Which Data Flows Through A Sequence Of Tasks Or Stages.

The pipeline pattern is a software design pattern that provides the ability to build and execute a sequence of operations. It provides an elegant way to chain different processing steps, where each step handles a specific task and the result is passed to the next step transparently.
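The "build, then execute" idea can be sketched as a small class that collects stages in a list and runs them in order. This is one possible shape under stated assumptions, not a reference implementation: the `Pipeline` class, its `addStage` and `execute` methods, and the file-name example are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical pipeline whose stages all map a T to a T.
class Pipeline<T> {
    private final List<UnaryOperator<T>> stages = new ArrayList<>();

    // Build phase: register a stage and return this pipeline for chaining.
    Pipeline<T> addStage(UnaryOperator<T> stage) {
        stages.add(stage);
        return this;
    }

    // Execute phase: pass the value through every stage, in insertion order.
    T execute(T input) {
        T current = input;
        for (UnaryOperator<T> stage : stages) {
            current = stage.apply(current);
        }
        return current;
    }
}

class PipelineDemo {
    static Pipeline<String> fileNamePipeline() {
        return new Pipeline<String>()
                .addStage(String::trim)               // drop surrounding spaces
                .addStage(s -> s.replace(' ', '_'))   // make it path-friendly
                .addStage(s -> s + ".txt");           // add an extension
    }

    public static void main(String[] args) {
        System.out.println(fileNamePipeline().execute("  my report ")); // prints my_report.txt
    }
}
```

Keeping every stage at the same type `T` means stages can be added or reordered at runtime. The trade-off is that a stage cannot change the type of the value it passes on.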

A Pipeline Design Pattern Is A Software Design Pattern That Processes Or Executes A Series Of Steps Or Stages In A Linear Sequence.

It allows you to break down complex tasks into smaller, modular steps or stages that can be executed in order. Which data stack to use?